There are team competitions and mixed teams, there are pairs and mixed pairs, there are individuals and ... Well, before we get to that, an aside.

Young people are struggling today. The Internet offers alternatives to personal connection that are compelling but leave people anxious and unable to get on with life. When I was young, long ago, I struggled with life, and I was saved by getting involved with Bridge. I married a Bridge player, and our conversations about the game have been a pleasant part of our life together that, after more than 50 years, has never become boring. Bridge seems to hit a sweet spot, combining skill, co-operation, competition, uncertainty and the vicissitudes of good and bad luck. Perhaps a resurgence in our game could play a part in curing the world's current malaise.

When I started, there was a natural progression from simpler trick-taking games to rubber bridge to tournament bridge. That path works far less well in the Internet age. Maybe Mixed Individual Bridge can be part of a different progression. Let's think about it.

The concept is actually very simple. We divide the field into two equal sets, which we suggestively label M and F. We then play a partial individual movement in which the M players sit only North or West and the F players sit only East or South. Since North partners South and West partners East, every partnership consists of one M player and one F player. There are then two sets of results: one for the M players and one for the F players.

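A two-table match is the most convenient for some purposes. This has four M and four F players. We want each M player to partner each F player an equal number of times, and since each M player has one F partner per round, the number of rounds must be a multiple of 4. We also want each M player to be compared against each of the three other M players an equal number of times. In each round an M player is compared with exactly one other M player, the one in the same seat at the other table, so the number of rounds must also be a multiple of 3. Together that means a multiple of 4 × 3 = 12 rounds. It is also possible to run a three-table mixed individual movement over 12 rounds with some imbalance, which might be convenient when the total number of tables is odd.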
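The counting argument can be checked with a small program. Below is a minimal Python sketch of one way to build a 12-round, two-table mixed individual schedule and count how balanced it is. The construction (rotating the M-F partnerships by a fixed shift, while cycling through the three ways of splitting the four M players into comparison pairs) and the names `SPLITS`, `f_partner`, `build_movement` and `balance_counts` are hypothetical illustrations, not necessarily the movement the author uses. It assumes the same board is played at both tables in each round.

```python
from collections import Counter

# Hypothetical labelling: M0-M3 sit North or West, F0-F3 sit South or East.
# The three ways of splitting four players into two pairs; in each round one
# of these decides which M players are compared with each other.
SPLITS = [((0, 1), (2, 3)), ((0, 2), (1, 3)), ((0, 3), (1, 2))]


def f_partner(m, shift):
    """F player who partners M player m when partnerships are rotated by shift."""
    return (m + shift) % 4


def build_movement():
    """Return 12 rounds, each a pair of tables mapping seat -> player."""
    rounds = []
    for (p, q), (u, v) in SPLITS:
        for shift in range(4):
            # p and q are North at tables 1 and 2, so their N-S results on the
            # same board are compared; u and v likewise both sit West.
            table1 = {"N": f"M{p}", "S": f"F{f_partner(p, shift)}",
                      "W": f"M{u}", "E": f"F{f_partner(u, shift)}"}
            table2 = {"N": f"M{q}", "S": f"F{f_partner(q, shift)}",
                      "W": f"M{v}", "E": f"F{f_partner(v, shift)}"}
            rounds.append((table1, table2))
    return rounds


def balance_counts(rounds):
    """Count (M, F) partnerships and head-to-head comparisons per pair."""
    partnerships = Counter()
    comparisons = Counter()
    for table1, table2 in rounds:
        for table in (table1, table2):
            partnerships[(table["N"], table["S"])] += 1
            partnerships[(table["W"], table["E"])] += 1
        # The two players in the same seat at the two tables play the board
        # in the same direction, so their scores are compared directly.
        for seat in "NESW":
            comparisons[frozenset((table1[seat], table2[seat]))] += 1
    return partnerships, comparisons


rounds = build_movement()
partnerships, comparisons = balance_counts(rounds)
print(len(rounds))                 # 12
print(set(partnerships.values()))  # {3}: each M-F pair partners 3 times
print(set(comparisons.values()))   # {4}: each M-M and F-F pair compared 4 times
```

The F players' comparisons come out balanced as well, because rotating the partnerships maps each way of pairing up the M players onto a way of pairing up the F players. The sketch checks only these conditions; it makes no attempt to balance table opponents or how often each player sits North-South rather than East-West.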

While a mixed individual will suit many, others will prefer a non-mixed event, and one will be necessary anyway if there is an excess of M or F players. A non-mixed individual can be run in the same way, splitting the field arbitrarily, or by seeding, into North-West and East-South halves with separate winners. This allows non-mixed individuals to be run alongside the mixed competition.

I think it works best to run short matches of just 12 boards over 90 minutes or so. Then, if there is time for several such matches, players can be assigned to matches Swiss-style, so that they find their own level. Indeed, I think it is possible to run a continuously updated ranking system in the style of the one used in professional tennis, with people moving up when they finish ahead of those ranked higher. The volatility would need smoothing, since this form of play has a larger element of luck than we expect in pairs and teams competitions.
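One way to sketch such a ranking, swapping in an Elo-style rating update rather than the points tables actually used in professional tennis, is to treat every pair of players from the same set (M or F) within a match as a head-to-head game. The function names, the 400-point scale, the starting rating of 1500 and the default `k=8` are assumptions for illustration only; a smaller `k` is what damps the volatility.

```python
from itertools import combinations


def expected_score(r_a, r_b):
    """Elo expectation that a player rated r_a finishes ahead of one rated r_b."""
    return 1 / (1 + 10 ** ((r_b - r_a) / 400))


def update_ratings(ratings, match_scores, k=8.0):
    """Apply one match's results to ratings in place and return them.

    match_scores maps each player in one set (M or F) of a match to their
    score, e.g. matchpoint percentage. Every pair within the match counts as
    a head-to-head game; a small k damps the swings caused by luck.
    """
    deltas = {player: 0.0 for player in match_scores}
    for a, b in combinations(match_scores, 2):
        if match_scores[a] > match_scores[b]:
            actual = 1.0
        elif match_scores[a] < match_scores[b]:
            actual = 0.0
        else:
            actual = 0.5
        change = k * (actual - expected_score(ratings[a], ratings[b]))
        deltas[a] += change
        deltas[b] -= change
    # Apply all changes together so the order of the pairs does not matter.
    for player, delta in deltas.items():
        ratings[player] += delta
    return ratings


ratings = {"M1": 1500.0, "M2": 1500.0, "M3": 1500.0, "M4": 1500.0}
update_ratings(ratings, {"M1": 62.5, "M2": 55.0, "M3": 45.0, "M4": 37.5})
print(ratings)  # {'M1': 1512.0, 'M2': 1504.0, 'M3': 1496.0, 'M4': 1488.0}
```

Because each pair's gain and loss cancel, a match leaves the total of the ratings unchanged. Finishing ahead of a higher-rated player earns more than finishing ahead of a lower-rated one, which gives the "moving up" behaviour in a gradual way.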

An essential feature of this plan is a fixed, simple bidding system used by everyone. There is then no need for explanations or alerts. The same applies to leads and signals, which are also prescribed. The complexity of the prescribed system may vary with the players' level. At the lower levels the system should exclude all weak artificial bids, and psyching strong artificial bids should be forbidden.

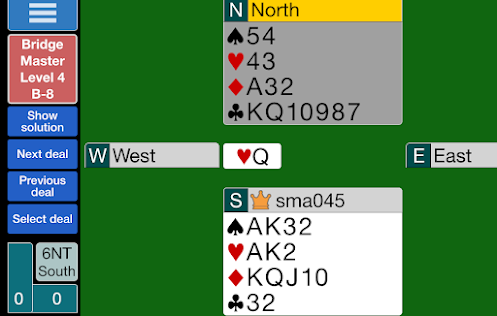

At beginner level, the hands should come with a predefined auction, together with an explanation of the bids in that auction. The game is then just about the play of the cards, but with the information that an auction can provide.

I think this could become a new progression leading into the world of tournament Bridge, but I also think that this form of Bridge will suit many people in its own right. The advantages include playing with different people, avoiding the difficulties of establishing and maintaining a partnership, and escaping the hassle of agreeing on a system and then remembering it.

Queries and explanations in Bridge slow the game down, but it is hard to say who is to blame. With them eliminated, there is an opportunity to introduce timing, as in chess. Just knowing who is slow will be a good start, before we even think about how to penalise slowness. Timing could be done simply, with clocks as in chess, but another possibility is to automate it. A small computer with a camera, such as a Raspberry Pi, mounted on something resembling a floor lamp, could look down on the table and watch the bids from the bidding boxes and the cards as they are played. This could produce a record of the play and also record the time taken by each player.
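Once such a record exists, working out who is slow is simple arithmetic. The sketch below assumes a hypothetical log format, a chronological list of `(timestamp_seconds, player)` pairs with one entry per bid or card, where each entry names the player who made the decision (so dummy's cards would be logged against declarer). The function name and format are illustrative, not part of any existing system. Each gap since the previous action is charged to the player who acted, much as a chess clock runs only for the player to move.

```python
from collections import defaultdict


def time_per_player(events, start_time):
    """Total thinking time per player for one board, in seconds.

    events: chronological (timestamp_seconds, player) pairs, one per bid or
    card. The gap since the previous action (or since start_time, for the
    first action) is charged to the player who acted.
    """
    totals = defaultdict(float)
    previous = start_time
    for timestamp, player in events:
        totals[player] += timestamp - previous
        previous = timestamp
    return dict(totals)


# Hypothetical log: the first four calls of an auction, timed from when the
# board reached the table, with players identified by seat.
auction = [(5.0, "N"), (9.0, "E"), (30.0, "S"), (32.0, "W")]
print(time_per_player(auction, start_time=0.0))
# {'N': 5.0, 'E': 4.0, 'S': 21.0, 'W': 2.0}
```

Summing these totals over all boards of a match would give each player's overall time, which is the "who is slow" information before any penalty scheme is considered.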

Of course, the natural way to start is for existing clubs to give it a try. I think their members will support the experiment if they catch a glimpse of the vision, which is to revitalise the game among younger people. After that, we need to work out how to expand so that it is conveniently available to new and existing players.